Intuition and Semantic Reasoning in AI


“An Experimental Analysis of Intuition and Semantic Reasoning in Linguistic Visualizations by Humans and AI (LLMs)”

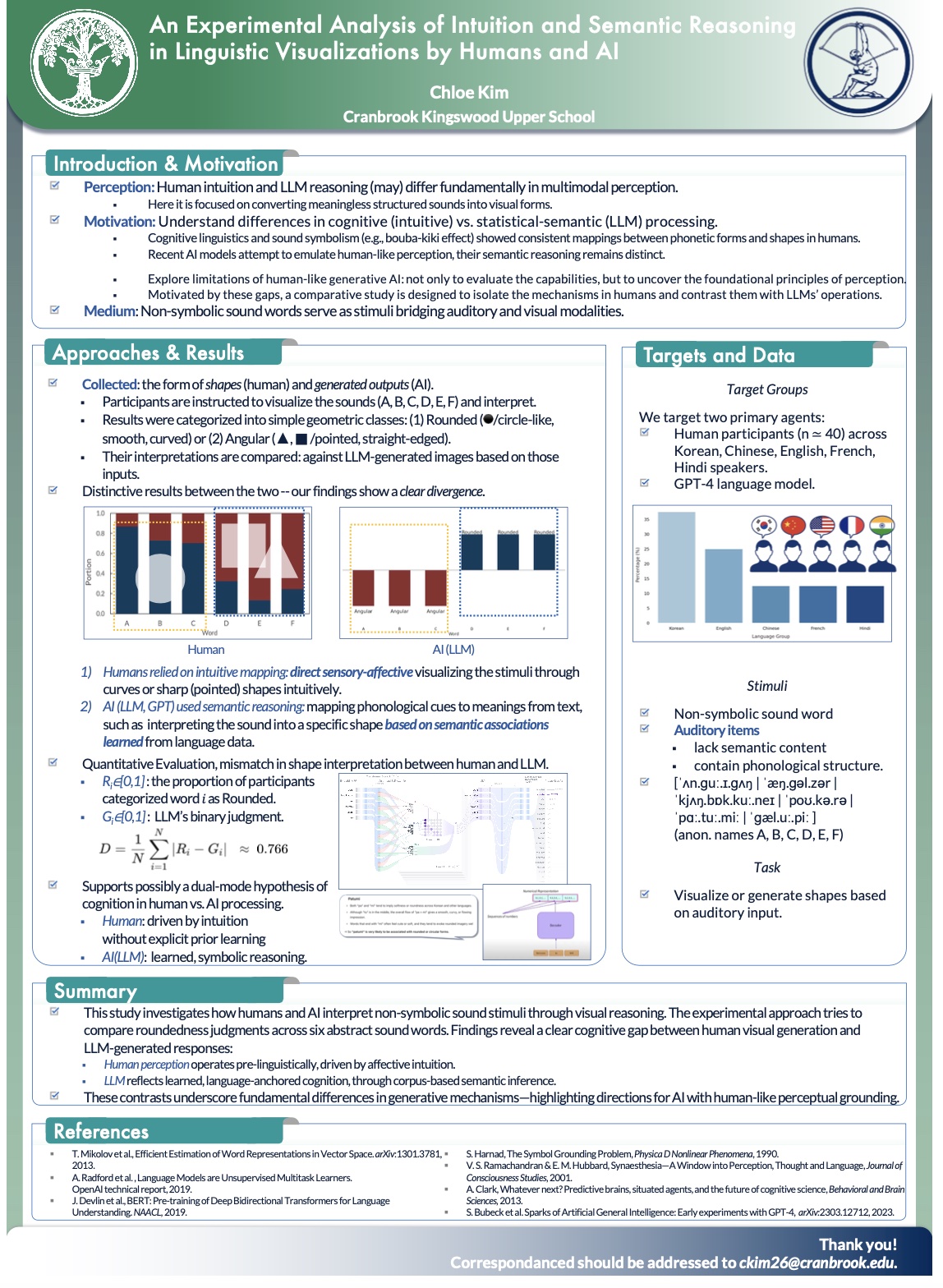

We experimentally analyze how humans and AI (large language models, LLMs) process association stimuli through distinct cognitive mechanisms in visual generation tasks based on linguistic association. As stimuli, we use non-symbolic sound words: meaningless but structured sound sequences. This study focuses on the contrast between human intuition and the learned semantic reasoning of LLMs. Humans tend to visualize these sound-associated words without prior learning, relying on immediate sensory and affective cues to produce intuitive forms such as curves or pointed shapes. In contrast, LLMs such as GPT map the same words onto shapes using semantic structures and statistical patterns acquired from large-scale text corpora.

To test this, we conduct experiments with a cross-linguistic and cross-cultural group of human participants (n ≈ 40; speakers of Korean, Chinese, English, French, and Hindi) and with an LLM (GPT-4). In a shape categorization step, the images imagined by the human participants and the images generated by the LLM are classified into basic geometric forms such as circles, triangles, and rectangles. The results show that humans rely on pre-existing intuitive mappings between sound and shape, transferring directly from sensory impression to visual form without explicit learning. By contrast, the shapes the LLM expresses are derived from semantic knowledge learned from language corpora, reflecting a meaning-driven reasoning process.
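The abstract does not say how the categorized shapes from the two groups are compared. As a minimal sketch, assuming each imagined or generated image has already been labeled with one of the basic forms, the tallying step and one possible comparison could look like the following. The total variation distance used here is our illustrative choice, not necessarily the authors' method, and the "other" catch-all category and the labels in the usage lines are hypothetical placeholders, not study data.

```python
from collections import Counter

# Basic geometric forms named in the study; "other" is a hypothetical catch-all.
CATEGORIES = ("circle", "triangle", "rectangle", "other")


def category_proportions(labels):
    """Return the share of each shape category in a list of shape labels."""
    # Any label outside the known categories is counted as "other".
    counts = Counter(label if label in CATEGORIES else "other" for label in labels)
    total = sum(counts.values())
    if total == 0:
        return {c: 0.0 for c in CATEGORIES}
    return {c: counts[c] / total for c in CATEGORIES}


def total_variation(p, q):
    """Total variation distance between two category distributions (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(p[c] - q[c]) for c in CATEGORIES)


if __name__ == "__main__":
    # Hypothetical placeholder labels, not data from the experiment.
    human = category_proportions(["circle", "circle", "triangle"])
    llm = category_proportions(["rectangle", "circle", "triangle"])
    print(total_variation(human, llm))
```

A distance near 0 would mean the two groups chose shape categories in similar proportions; a larger value would indicate diverging mappings. A real analysis would likely also break results down per stimulus word and per language group.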

We identify a fundamental gap between human intuition-based learning and the meaning-based learning of LLMs. These findings advance our understanding of multimodal perception and of the generative mechanisms in foundation models.